TL;DR

Mixture-of-Sparse-Attention (MoA) compresses attention in LLMs so that they compute short attention but remember long context.

🎉 Introducing Mixture-of-Sparse-Attention (MoA) - our new method for compressing attention in LLMs!

🚀 Achieves 6.6-8.2x higher decode throughput than dense FlashAttention2.

🎯 Improves retrieval accuracy by 1.5-7.1x compared to uniform sparse attention.

🤗 Easy to use with our automatic compression pipeline - deploy in just a few lines of code!

Abstract

Sparse attention can effectively mitigate the significant memory and throughput demands of Large Language Models (LLMs) in long contexts. Existing methods typically employ a uniform sparse attention mask, applying the same sparse pattern across different attention heads and input lengths. However, this approach fails to capture the diverse attention patterns inherent in LLMs, ignoring their distinct accuracy-latency trade-offs.

To address this challenge, we propose the Mixture of Sparse Attention (MoA), which automatically tailors distinct sparse attention configurations to different heads and layers. MoA constructs and navigates a search space of various attention patterns and their scaling rules relative to input sequence lengths. It profiles the model, evaluates potential configurations, and pinpoints the optimal sparse attention compression plan. MoA adapts to varying input sizes, revealing that some attention heads expand their focus to accommodate longer sequences, while others consistently concentrate on fixed-length local contexts.

Experiments show that MoA increases the effective context length by 3.9x with the same average attention span, boosting retrieval accuracy by 1.5-7.1x over the uniform-attention baseline across Vicuna-{7B,13B} and Llama3-{8B,70B} models. Moreover, MoA narrows the capability gaps between sparse and dense models, reducing the maximum relative performance drop from 9%-36% to within 5% across two long-context understanding benchmarks. MoA achieves a 1.2-1.4x GPU memory reduction and boosts decode throughput by 6.6-8.2x and 1.7-1.9x compared to FlashAttention2 and vLLM, respectively, with minimal impact on performance.

Observation

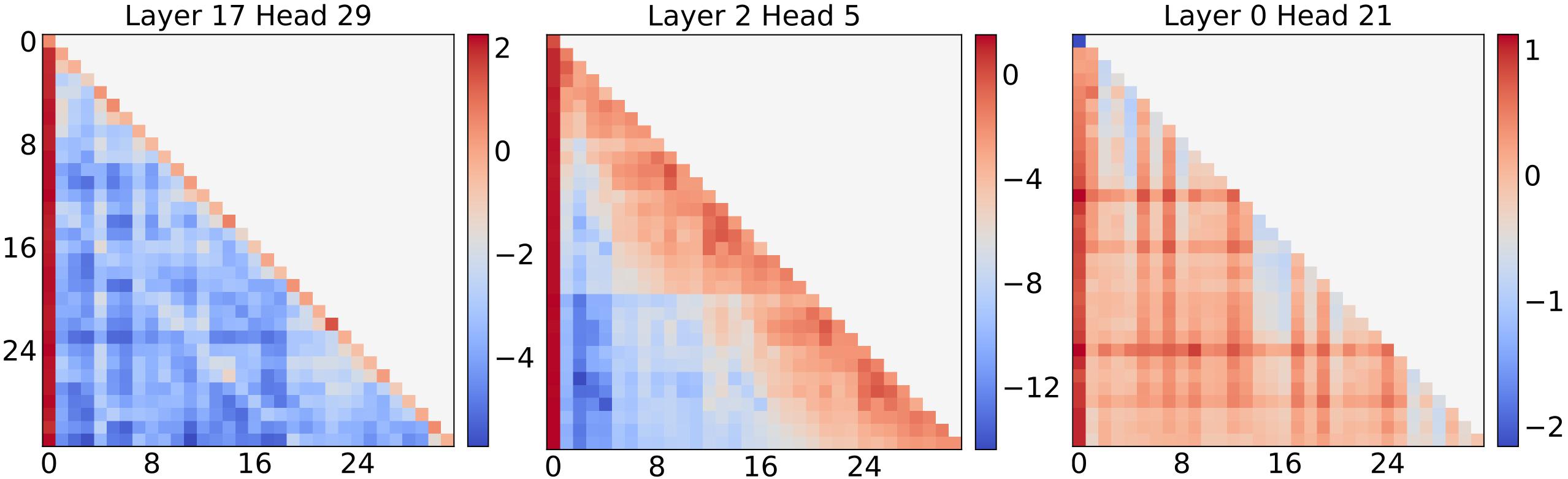

Heterogeneous Attention Patterns

Different attention heads in LLMs exhibit heterogeneous attention patterns. In the figure above, the first head primarily focuses on local contexts with a narrow-span sliding window, while the third head covers nearly the entire input, indicating global attention.

Heterogeneous Elastic Rules

In addition to heterogeneity at a fixed input length, different attention heads also exhibit varying elastic behaviors as the input length changes. The figure above illustrates this variability: for shorter inputs (the upper-left part of the attention matrix), the second and third heads both show global attention. As the input length increases, however, the second head keeps a medium-span local focus, while the third head continues to expand its span as global attention.

Methodology

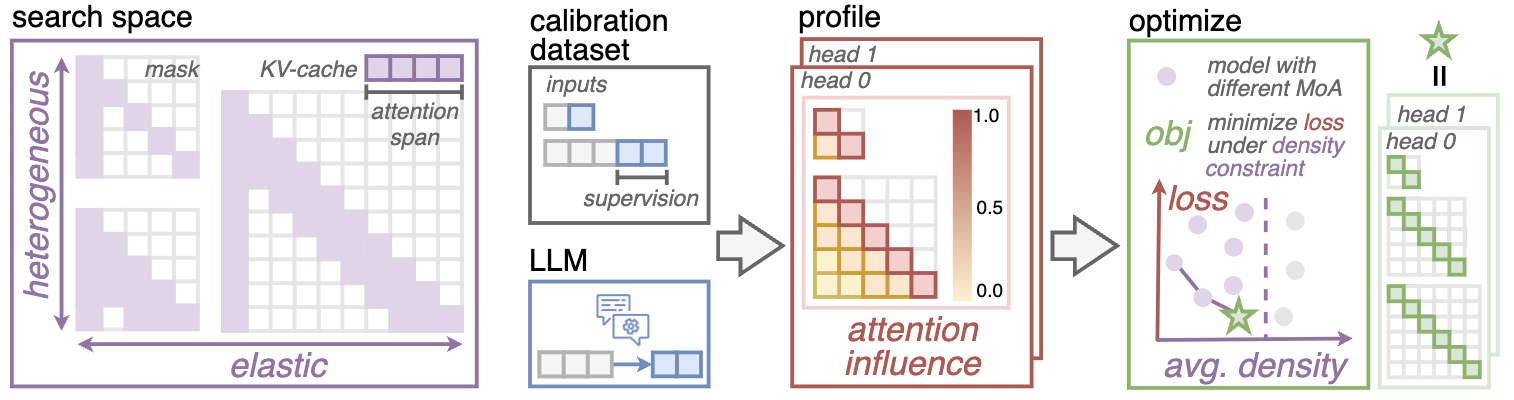

Elastic Rule Search Space

Taking into account the inherently heterogeneous and elastic nature of LLM attention patterns, MoA adopts a hardware-friendly sliding-window mask that keeps the initial tokens as attention sinks. The attention span of each head is formulated as a linear function of the input length.
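A minimal sketch of one elastic rule, assuming the linear span takes the form S(N) = α + β·N with per-head constants α and β (our notation for illustration, not necessarily the paper's). The helpers `elastic_span`, `sliding_window_sink_mask`, and `mask_density` and the `num_sink` parameter are hypothetical, and the dense Python lists are only for readability; an efficient kernel would not materialize the mask.

```python
def elastic_span(alpha, beta, seq_len):
    """Span of one head as a linear function of input length N: S(N) = alpha + beta * N,
    rounded and clamped to [1, N]. alpha and beta are hypothetical per-head constants."""
    span = int(round(alpha + beta * seq_len))
    return max(1, min(span, seq_len))


def sliding_window_sink_mask(seq_len, span, num_sink):
    """Causal boolean mask: query i attends to key j (j <= i) if j is one of the first
    `num_sink` tokens (attention sinks) or lies within the last `span` positions up to i."""
    return [
        [j <= i and (j < num_sink or i - j < span) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def mask_density(mask):
    """Fraction of causal (lower-triangular) entries the mask keeps."""
    n = len(mask)
    kept = sum(sum(row) for row in mask)
    return kept / (n * (n + 1) / 2)


# A local head (fixed 4-token window) versus an elastic head whose span grows with N.
for n in (16, 32):
    print(n, elastic_span(4, 0.0, n), elastic_span(0, 0.5, n))  # prints "16 4 8", then "32 4 16"
```

With `alpha=4, beta=0.0` the head keeps the same narrow window at every length, while `alpha=0, beta=0.5` yields a span that grows with the input, mirroring the local and expanding heads described in the observations.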

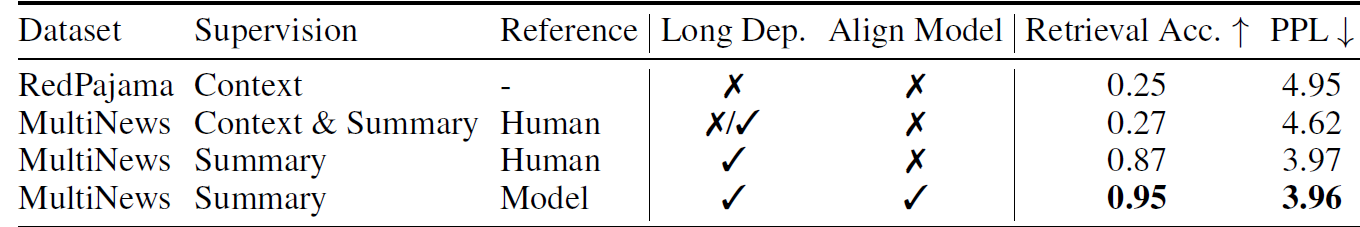

Attention Influence Profiling

MoA approximates the loss increase of masking each attention value using a first-order Taylor expansion. In practice, we use backpropagation on a calibration dataset to calculate the average attention influence of each head and layer.
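The first-order estimate can be sketched as follows. Masking an attention value moves it from A_ij to 0, so the loss changes by roughly ∂L/∂A_ij · (0 - A_ij); the sketch sums this over every entry a candidate mask drops and averages over calibration samples. It assumes the attention probabilities and their gradients have already been captured by backpropagation, and, as a simplification, it ignores how the remaining attention weights would be renormalized after masking. The function names are hypothetical.

```python
def estimate_mask_loss_increase(attn, grad, mask):
    """First-order Taylor estimate of the loss increase from zeroing every attention
    value that `mask` drops: sum over dropped (i, j) of dL/dA_ij * (0 - A_ij)."""
    total = 0.0
    for a_row, g_row, m_row in zip(attn, grad, mask):
        for a, g, keep in zip(a_row, g_row, m_row):
            if not keep:
                total += -g * a
    return total


def average_influence(samples, mask):
    """Average the estimate over calibration samples given as [(attn, grad), ...]."""
    estimates = [estimate_mask_loss_increase(attn, grad, mask) for attn, grad in samples]
    return sum(estimates) / len(estimates)
```

A mask that drops entries with large attention and large negative gradient (the loss would rise if they shrank) gets a high estimated cost, so candidate rules that keep those entries are preferred.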

Our key insight is that the calibration dataset should feature long-range dependencies and model alignment. MoA uses the long-context MultiNews dataset, computes the loss only on the summary part, and, more importantly, uses the response of the dense model instead of the ground-truth answer.
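A sketch of how one calibration example might be assembled under this recipe: the MultiNews article is the prompt, the target is the dense model's own generated summary rather than the reference summary, and only summary tokens contribute to the loss. The `-100` marker follows PyTorch's default `ignore_index` for `CrossEntropyLoss`; the token IDs, function names, and per-token losses are hypothetical, and the one-position shift used for next-token prediction is omitted.

```python
IGNORE_INDEX = -100  # same value as PyTorch CrossEntropyLoss's default ignore_index


def build_calibration_example(article_ids, dense_summary_ids):
    """Concatenate article and dense-model summary; label only the summary tokens."""
    input_ids = list(article_ids) + list(dense_summary_ids)
    labels = [IGNORE_INDEX] * len(article_ids) + list(dense_summary_ids)
    return input_ids, labels


def summary_only_loss(token_losses, labels):
    """Average per-token losses over positions whose label is not ignored."""
    kept = [loss for loss, label in zip(token_losses, labels) if label != IGNORE_INDEX]
    return sum(kept) / len(kept)


# Hypothetical token IDs: a 3-token article and a 2-token summary produced by the dense model.
input_ids, labels = build_calibration_example([101, 102, 103], [7, 8])
```

Using the dense model's output as the target means the profiled gradients measure how far the sparse model drifts from the dense model itself, rather than from a human-written reference.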

Automatic Optimization

MoA automatically selects the optimal elastic rule for each attention head to minimize accuracy loss across various sequence lengths under a density budget. Based on the profiling results, MoA first identifies the Pareto-front compression plans: plans for which any reduction in accuracy loss at one profiled length would increase the loss at another.

To generalize best to lengths beyond those profiled, MoA then selects, among the Pareto-front solutions, the plan with the minimum loss at an unseen length as the final plan.
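The Pareto filtering and final selection can be illustrated on a toy problem. This sketch assumes each candidate rule for a head comes with an estimated loss (for instance, summed attention influence) and a density at each length, requires the mean density across heads to stay within the budget at every profiled length, and simply enumerates every plan. Brute-force enumeration is exponential in the number of heads and is used here only for clarity; a search over a real model's heads would need a proper optimization solver. All numbers, lengths, and names are hypothetical.

```python
from itertools import product


def enumerate_plans(head_rules, profile_lengths, budget):
    """Yield (plan, losses) for every per-head rule assignment whose mean density
    is within `budget` at each profiled length. head_rules[h][k] is a dict with
    per-length "loss" and "density" entries for candidate rule k of head h."""
    for plan in product(*(range(len(rules)) for rules in head_rules)):
        chosen = [head_rules[h][k] for h, k in enumerate(plan)]
        if all(sum(r["density"][n] for r in chosen) / len(chosen) <= budget
               for n in profile_lengths):
            lengths = chosen[0]["loss"].keys()
            losses = {n: sum(r["loss"][n] for r in chosen) for n in lengths}
            yield plan, losses


def dominates(a, b, lengths):
    """True if loss profile `a` is no worse than `b` everywhere and better somewhere."""
    return all(a[n] <= b[n] for n in lengths) and any(a[n] < b[n] for n in lengths)


def pareto_front(candidates, lengths):
    """Keep plans that no other plan dominates on the profiled lengths."""
    return [(plan, losses) for plan, losses in candidates
            if not any(dominates(other, losses, lengths) for _, other in candidates)]


def select_plan(head_rules, profile_lengths, unseen_length, budget):
    """Pareto-filter on profiled lengths, then pick the lowest loss at an unseen length."""
    candidates = list(enumerate_plans(head_rules, profile_lengths, budget))
    front = pareto_front(candidates, profile_lengths)
    return front, min(front, key=lambda c: c[1][unseen_length])


def rule(density, losses):
    """Hypothetical helper: a rule with constant density and the given per-length losses."""
    return {"density": {n: density for n in losses}, "loss": losses}


# Toy setup: two heads, each with a "local" rule (index 0) and a "global" rule (index 1).
head_rules = [
    [rule(0.2, {2048: 0.5, 4096: 0.6, 8192: 0.9}), rule(0.8, {2048: 0.0, 4096: 0.0, 8192: 0.0})],
    [rule(0.2, {2048: 0.1, 4096: 0.7, 8192: 0.8}), rule(0.8, {2048: 0.0, 4096: 0.0, 8192: 0.0})],
]
front, (best_plan, best_losses) = select_plan(head_rules, [2048, 4096], 8192, budget=0.55)
```

In this toy case the all-global plan exceeds the budget, the all-local plan is dominated, and two mixed plans survive on the Pareto front; the unseen 8192-token length then decides between them.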

Experiments and Analysis

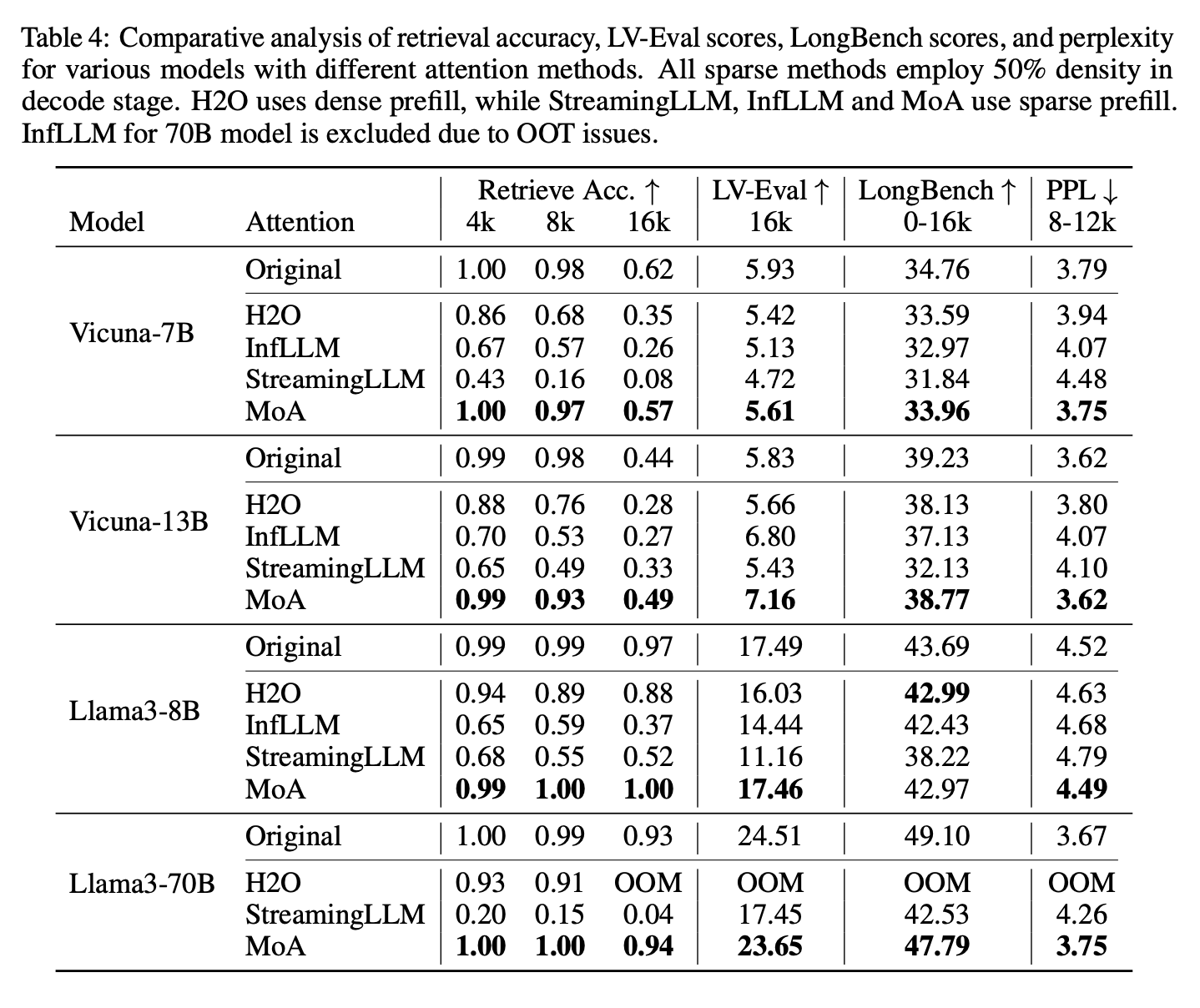

📈 Overall Performance

MoA outperforms state-of-the-art sparse attention methods and achieves performance comparable to the original dense model at 50% density. On average, MoA shows only a 1% relative accuracy drop on retrieval tasks. Furthermore, its maximum relative score drop is just 5% and 3% across the two benchmarks, significantly smaller than that of the baseline methods.

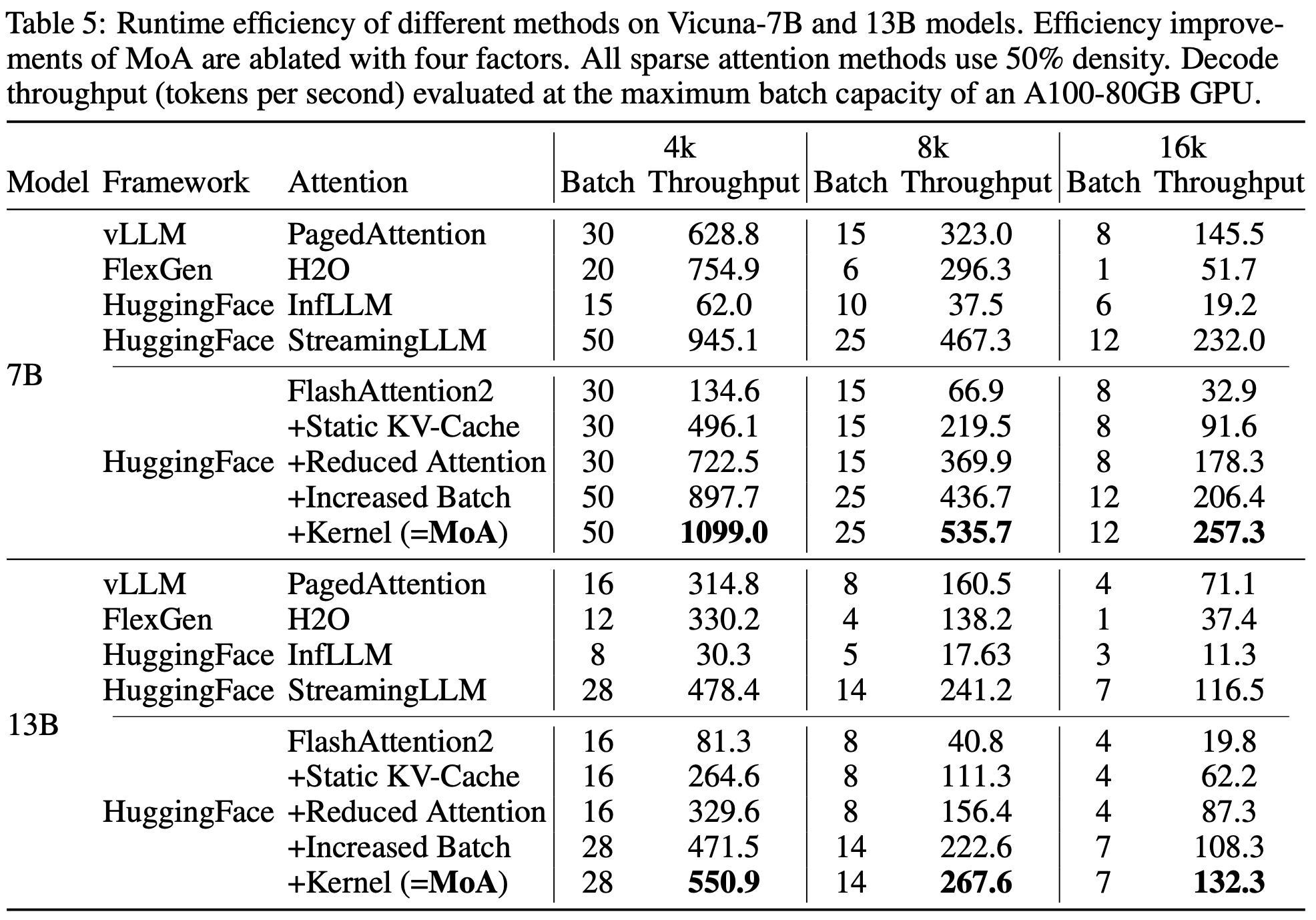

🧭 Efficiency Improvement

MoA reduces the GPU memory footprint by 1.2-1.4x and boosts decode throughput by 6.6-8.2x over dense FlashAttention2 on a single GPU. These gains are primarily attributed to the static-size KV cache, reduced attention computation, larger batch sizes, and an optimized GPU kernel.
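A static-size KV cache keeps memory flat as the context grows. A minimal sketch for a single head, assuming the head's span is already fixed: it keeps the first `num_sink` tokens permanently plus a rolling window of the most recent `window` tokens, so its size never exceeds `num_sink + window` however many tokens are decoded. The class name and parameters are hypothetical, and a real implementation would store tensors in preallocated GPU buffers rather than Python objects.

```python
from collections import deque


class SinkWindowKVCache:
    """Hypothetical fixed-capacity KV cache for one head: the first `num_sink`
    tokens are kept forever, plus a rolling window of the latest `window` tokens."""

    def __init__(self, num_sink, window):
        self.num_sink = num_sink
        self.sink = []
        self.recent = deque(maxlen=window)  # a full deque drops its oldest entry on append

    def append(self, key, value):
        if len(self.sink) < self.num_sink:
            self.sink.append((key, value))
        else:
            self.recent.append((key, value))

    def entries(self):
        """Cached (key, value) pairs in token order: sinks first, then the recent window."""
        return self.sink + list(self.recent)

    def __len__(self):
        return len(self.sink) + len(self.recent)


# Decode 10 tokens into a cache with 2 sink slots and a 3-token window.
cache = SinkWindowKVCache(num_sink=2, window=3)
for t in range(10):
    cache.append(f"k{t}", f"v{t}")
```

Because each head's cache has a fixed capacity, the memory needed per sequence is known in advance, which is one way a bounded footprint can leave room for larger batches.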

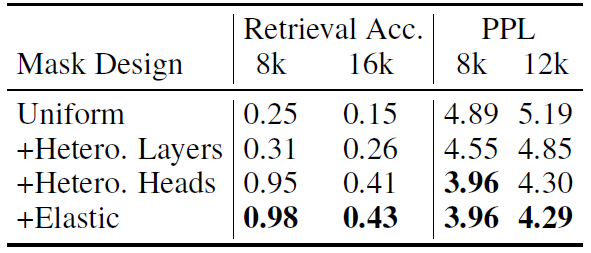

🔍 Ablation Studies

Starting from a basic uniform mask, we observe significant improvements as heterogeneity is introduced step by step: first across layers, then across heads, and finally through elastic rules.

Reference

@misc{fu2024moa,
  title={MoA: Mixture of Sparse Attention for Automatic Large Language Model Compression},
  author={Tianyu Fu and Haofeng Huang and Xuefei Ning and Genghan Zhang and Boju Chen and Tianqi Wu and Hongyi Wang and Zixiao Huang and Shiyao Li and Shengen Yan and Guohao Dai and Huazhong Yang and Yu Wang},
  year={2024},
  eprint={2406.14909},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}
